|

I am a fourth-year Ph.D. candidate in Computer Engineering at Northeastern University, USA, where I am part of the SMILE Lab and fortunate to be advised by Professor Yun Raymond Fu (ACM/IEEE/AAAI Fellow). My research advances generative AI for computer vision, including Visual Language Models, Video Understanding & Generation, World Models, and Trajectory & Motion Prediction/Planning. I am particularly interested in bridging these areas to enable token-efficient dense video understanding, AI-driven content creation, and autonomous systems and robotics. Prior to my Ph.D., I obtained my M.Sc. degree from Zhejiang University (ZJU). During my graduate studies, I was also a visiting student and remote intern at The Chinese University of Hong Kong (CUHK) and the University of California, San Diego (UCSD). Beyond academia, I have industry experience in both applied and fundamental AI research. I am currently a Research Scientist Intern at Meta Reality Labs Research (Sep–Dec 2025), working on world models and VLMs. Previously, I was a Research Intern at LinkedIn (Video AI, Summer 2025), working on VLMs and recommendation, and an Applied Scientist Intern at Amazon AWS AI Lab (Summer 2024), working on video understanding and video large language models. Earlier, I was a research intern at Tencent (腾讯), focusing on generative models for images and videos. I am actively seeking a research internship for Spring/Summer 2026 and am open to collaborations. If you are interested in working together, feel free to reach out! Email Me / Twitter / LinkedIn / GitHub / Hugging Face / Google Scholar / CV |

|

• 2025.09: Our paper VQToken (Extreme Token Reduction) has been accepted to NeurIPS 2025.

• 2025.08: I joined Meta as a Research Scientist Intern under Reality Labs Research.

• 2025.05: I joined Microsoft’s LinkedIn Video AI as a Research Intern in Video GenAI.

• 2024.05: I joined Amazon AWS AI Labs as an Applied Scientist Intern this summer.

• 2024.02: Our paper "Out-of-Sight Trajectory Prediction" has been accepted at CVPR.

• 2023.09: I received the ACM SIGMM travel award.

• 2023.08: Our paper "Layout Sequence Prediction From Noisy Mobile Modality" has been accepted at ACM MM.

• 2022.09: I joined SMILE Lab at Northeastern University.

|

My research lies in Computer Vision and Artificial Intelligence. It aims to explore the potential of GenAI for Video LLMs, World Models, and Motion Prediction & Planning. |


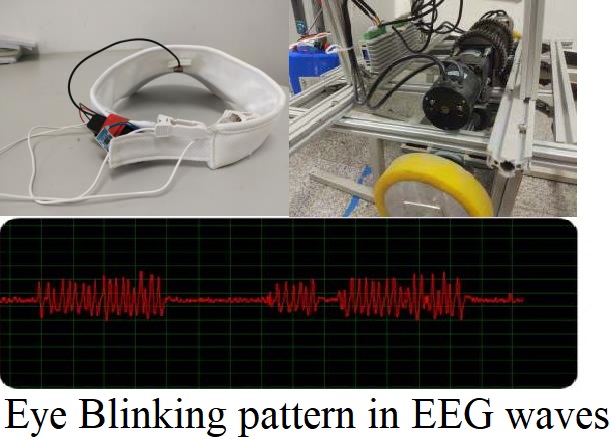

Several years ago, I delved into the fascinating world of sensor modalities and signal processing, which sparked a keen interest in embedded platforms. That experience led me to explore artificial intelligence and computer vision further. |

|

Provincial Grand Prize at the Challenge Cup Competition of Science Achievement in China. Proposed detecting eye-blink EMG noise mixed into the EEG signal and using intense eye-blink signals to control the wheelchair's direction, while analyzing the EEG to estimate the user's degree of tension and relaxation to control the wheelchair's speed. The result is an affordable way for paralyzed patients to control their wheelchairs and move independently. |

|

National First Prize at the National Biomedical Engineering Innovative Design Competition. Responsible for developing the host-computer software that received signals from the MSP430 board and filtered them in the spectral domain, and for developing an algorithm to detect abnormal ECG. |

|

First Prize at the Mobile Application Innovation Contest of North China. Responsible for programming the embedded microprocessor to sample the analog signals from the bend sensors on the gloves, which are used to predict sign language, and for displaying the prediction results in the app. |

|

Summer 2015. Responsible for programming the embedded microprocessors that control the mechanical structure, and for developing host-computer software that identifies the type of banknote using traditional image processing methods and then sorts the notes. |

|

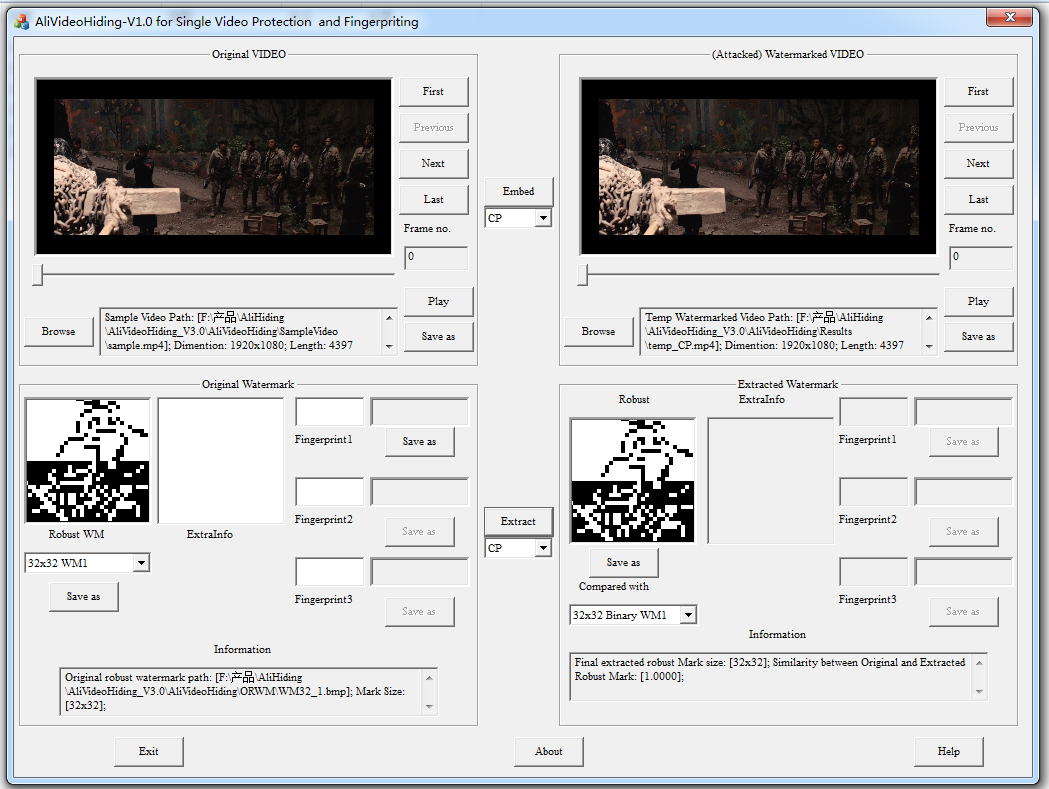

Alibaba-ZJU Joint Research Institute of Frontier Technologies Research Project. Responsible for developing the C++ software "Shared Memory Based Code Hiding Platform". Participated in developing a video stream watermarking algorithm. |

|

Meta | Reality Labs Research, Redmond, WA |

|

|

LinkedIn | Video AI, Mountain View, CA |

|

|

Amazon | AWS AI Lab, Bellevue, WA |

|

|

Toyota InfoTech Lab, Mountain View, CA |

|

|

Tencent, Shanghai, China |

|

|

SMILE Lab, Northeastern University, Boston |

|

|

University of California, San Diego |

|

|

The Chinese University of Hong Kong, Shenzhen |

|

|

Zhejiang University, Hangzhou, China |

|

| NeurIPS Scholar Award | |

| ACM MM Travel Grant Award | ACM SIGMM |

| National Biomedical Engineering Innovative Design Competition | National First Prize |

| Challenge Cup Competition of Science Achievement in China | Provincial Grand Prize |

| Mobile Application Innovation Contest of North China | Provincial First Prize |

| 'Holtek Cup' Microcontroller Application and Design Competition, Tianjin (6/453, < 1.3%) | Provincial First Prize |

| Tianjin IOT Innovation and Engineering Application Design Competition | Provincial First Prize |

| Tianjin Undergraduate Robotics Competition | Provincial First Prize |

| Tianjin International Student Internet Innovation and Entrepreneurship Competition | Provincial Second Prize |

| Northern China Robotics Competition | Provincial Second Prize |

• ES-Reasoning Workshop @ ICLR

• Conference: NeurIPS’23’24’25, IJCAI’25, MM’24, ICLR’24, ECCV’24, ICCV’25, ICML’25, AISTATS’24, WACV’25

• Journal: TPAMI, TIP, PR, TKDD